Remote Sensing for Physical Geography

Another important geographic technique for acquiring spatial information is remote sensing. This term refers to gathering information from great distances and over broad areas, usually through instruments mounted on aircraft or orbiting spacecraft. These instruments, or remote sensors, measure electromagnetic radiation coming from the Earth's surface and atmosphere as received at the aircraft or spacecraft platform. The data acquired by remote sensors are typically displayed as images—photographs or similar depictions on a computer screen or color printer—but are often processed further to provide other types of outputs, such as maps of vegetation condition or extent, or of land-cover class. Information obtained can range from fine local detail—such as the arrangement of cars in a parking lot—to a global-scale picture—for example, the “greenness” of vegetation for an entire continent. As you read this textbook, you will see many examples of remote sensing, especially images from orbiting satellites.

All substances, whether naturally occurring or synthetic, are capable of reflecting, transmitting, absorbing, and emitting electromagnetic radiation. For remote sensing, however, we are only concerned with energy that is reflected or emitted by an object and that reaches the remote sensor. For remote sensing of reflected energy, the Sun is the source of radiation in many applications. As we will see in Chapter 2, solar radiation reaching the Earth's surface is largely in the form of light energy that includes visible, near-infrared, and shortwave infrared light. Remote sensors are commonly constructed to measure radiation reflected from the Earth in all or part of this range of light energy. For remote sensing of emitted energy, the object or substance itself is the source of the radiation, which is related largely to its temperature.

COLORS AND SPECTRAL SIGNATURES

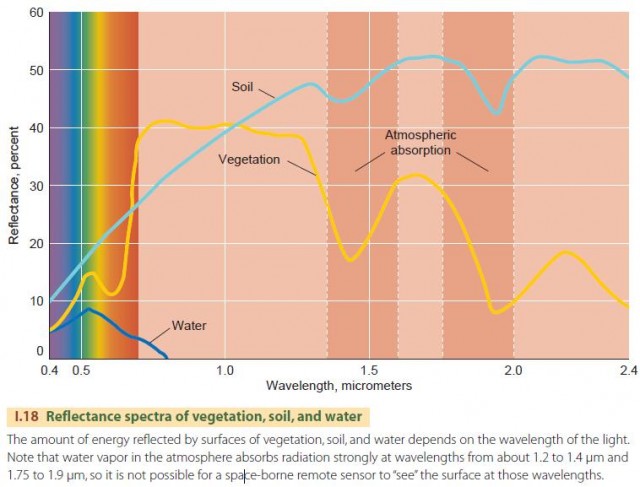

Most objects or substances at the Earth's surface possess color to the human eye. This means that they reflect radiation differently in different parts of the visible spectrum. Figure I.18 shows how the reflectance of water, vegetation, and soil varies with light ranging in wavelength from visible to shortwave infrared. Water surfaces are always dark but are slightly more reflective in the blue and green regions of the visible spectrum. Thus, clear water appears blue or blue-green to our eyes. Beyond the visible region, water absorbs nearly all radiation it receives and so looks black in images acquired in the near-infrared and shortwave infrared regions.

Vegetation appears dark green to the human eye, which means that it reflects more energy in the green portion of the visible spectrum while reflecting somewhat less in the blue and red portions. But vegetation also reflects very strongly in near-infrared wavelengths, which the human eye cannot see. Because of this property, vegetation is very bright in near-infrared images. This distinctive behavior of vegetation—appearing dark in visible bands and bright in the near-infrared—is the basis for much of vegetation remote sensing, as we will see in many examples of remotely sensed images throughout this book.
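The contrast between low red reflectance and high near-infrared reflectance can be condensed into a single number. One widely used choice is a normalized difference of the two bands, often called NDVI, although the text above does not name it. The sketch below is a minimal illustration. The function name and the reflectance values are assumptions chosen for the example and are not read from Figure I.18.

```python
def normalized_difference(nir, red):
    """Return (nir - red) / (nir + red), or 0.0 when both bands are zero."""
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


# Illustrative reflectances as fractions between 0 and 1 (assumed values).
samples = {
    "vegetation": {"red": 0.05, "nir": 0.50},
    "soil": {"red": 0.25, "nir": 0.30},
    "water": {"red": 0.03, "nir": 0.01},
}

indices = {
    name: normalized_difference(bands["nir"], bands["red"])
    for name, bands in samples.items()
}

for name, value in indices.items():
    print(f"{name}: {value:+.2f}")
```

With these assumed inputs, vegetation yields a strongly positive value, soil a value near zero, and water a negative value, which mirrors the behavior described above.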

The soil spectrum shows a slow increase of reflectance across the visible and near-infrared spectral regions and a slow decrease through the shortwave infrared. Looking at the visible part of the spectrum, we see that soil is brighter overall than vegetation and is somewhat more reflective in the orange and red portions. Thus, it appears brown. (Note that this is just a “typical” spectrum—soil color can actually range from black to bright yellow or red.)

We refer to the pattern of relative brightness within the spectrum as the spectral signature of an object or type of surface. Spectral signatures can be used to recognize objects or surfaces in remotely sensed images in much the same way that we recognize objects by their colors. In computer processing of remotely sensed images, spectral signatures can be used to make classification maps, showing, for example, water, vegetation, and soil.
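One simple way to turn spectral signatures into a classification map is to assign each pixel to the reference signature it most closely resembles. The sketch below uses a minimum-distance rule. It is only one of many possible approaches and is not necessarily the method used for the images in this book. The reference signatures, band choices, and pixel values are hypothetical.

```python
import math

# Hypothetical reference signatures: reflectance in four bands
# (green, red, near-infrared, shortwave infrared). Values are assumed.
SIGNATURES = {
    "water": (0.06, 0.04, 0.01, 0.01),
    "vegetation": (0.10, 0.05, 0.50, 0.25),
    "soil": (0.18, 0.25, 0.32, 0.38),
}


def classify_pixel(pixel):
    """Return the label whose signature is nearest to the pixel's spectrum."""
    return min(SIGNATURES, key=lambda label: math.dist(pixel, SIGNATURES[label]))


def classify_image(image):
    """Classify every pixel in a 2-D grid of band tuples."""
    return [[classify_pixel(pixel) for pixel in row] for row in image]


# A tiny 2 x 2 "image"; each pixel holds one reflectance per band.
image = [
    [(0.05, 0.03, 0.02, 0.01), (0.11, 0.06, 0.45, 0.22)],
    [(0.20, 0.27, 0.30, 0.35), (0.09, 0.05, 0.55, 0.28)],
]

class_map = classify_image(image)
for row in class_map:
    print(row)
```

The result is a grid of labels with the same layout as the input image, which is the essence of a classification map.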

THERMAL INFRARED SENSING

While objects reflect some of the solar energy they receive, they also emit internal energy as heat that can be remotely sensed. Warm objects emit more thermal radiation than cold ones, so warmer objects appear brighter in thermal infrared images. Besides temperature, the intensity of infrared emission depends on the emissivity of an object or a substance. Objects with higher emissivity appear brighter at a given temperature than objects with lower emissivities. Differences in emissivity affect thermal images. For example, two different surfaces might be at the same temperature, but the one with the higher emissivity will look brighter because it emits more energy.
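The combined effect of temperature and emissivity can be illustrated with the Stefan-Boltzmann relation, in which the total energy emitted per unit area is the emissivity times a constant times the fourth power of absolute temperature. The text above does not give this formula, so treat the sketch as an assumption-laden illustration: it sums emission over all wavelengths rather than over the band a particular sensor records, and the emissivity values are made up for the example.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4


def emitted_flux(temperature_k, emissivity):
    """Total emitted flux (W/m^2) for a surface of uniform emissivity."""
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must lie between 0 and 1")
    if temperature_k < 0:
        raise ValueError("temperature must be in kelvins and non-negative")
    return emissivity * STEFAN_BOLTZMANN * temperature_k ** 4


# Two surfaces at the same temperature but with different (assumed) emissivities.
high = emitted_flux(300.0, 0.95)
low = emitted_flux(300.0, 0.80)
print(f"emissivity 0.95: {high:.1f} W/m^2")
print(f"emissivity 0.80: {low:.1f} W/m^2")
```

At the same temperature, the surface with the higher emissivity emits more energy and would therefore appear brighter in a thermal image.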

Some substances, such as crystalline minerals, show different emissivities at different locations in the thermal infrared spectrum. In a way, this is like having a particular color, or spectral signature, in the thermal infrared spectral region. In Chapter 11 we will see examples of how some rock types can be distinguished and mapped using thermal infrared images.

RADAR

There are two classes of remote sensor systems: passive and active. Passive systems acquire images without providing a source of wave energy. The most familiar passive system is the camera, which uses electronic detectors or photographic film to sense solar energy reflected from the scene. Active systems use a beam of wave energy as a source, sending the beam toward an object or surface. Part of the energy is reflected back to the source, where it is recorded by a detector.

Radar is an example of an active sensing system that is often deployed on aircraft or spacecraft. Radar systems in remote sensing use the microwave portion of the electromagnetic spectrum, so named because the waves have a short wavelength compared to other types of radio waves. Radar systems emit short pulses of microwave radiation and then “listen” for a returning microwave echo. By analyzing the strength of each return pulse and the exact time it is received, the system creates an image showing the surface as it is illuminated by the radar beam.
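The timing of an echo translates directly into distance. Because the pulse travels to the surface and back at the speed of light, the one-way distance is half the round-trip time multiplied by that speed. The following sketch shows the arithmetic. The function name and the sample delay are assumptions for illustration, and real radar processing involves many further steps.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def echo_range_m(round_trip_s):
    """One-way distance (m) to a reflector, given the round-trip echo delay."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# An echo that returns 100 microseconds after the pulse was sent (assumed value).
distance = echo_range_m(100e-6)
print(f"{distance / 1000:.2f} km")
```

A delay of 100 microseconds corresponds to a reflector roughly 15 km from the antenna.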

Radar systems used for land imaging emit microwave energy that is not significantly absorbed by water. This means that radar systems can penetrate clouds to provide images of the Earth's surface in any weather. In contrast, ground-based weather radars use microwaves that are scattered by water droplets or ice crystals and produce an image of precipitation over a region. They detect rain, snow, and hail and are used in local weather forecasting.

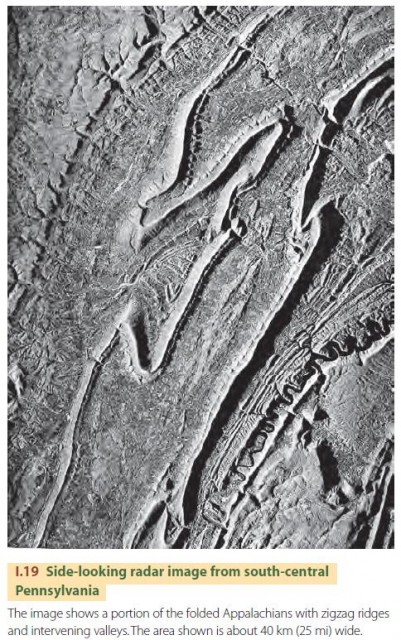

Figure I.19 shows a radar image of the folded Appalachian Mountains in south-central Pennsylvania. It is produced by an airborne radar instrument that sends pulses of radio waves downward and sideward as the airplane flies forward. Surfaces oriented most nearly at right angles to the slanting radar beam will return the strongest echo and therefore appear lightest in tone.

In contrast, those surfaces facing away from the beam will appear darkest. The effect is to produce an image resembling a three-dimensional model of the landscape illuminated at a strong angle. The image shows long mountain ridges running from upper right to lower left and casting strong radar shadows to emphasize their three-dimensional form. The ridges curve and turn sharply, revealing the geologic structure of the region. Between the ridges are valleys of agricultural land, which are distinguished by their rougher texture in the image. In the upper left is a forested plateau that has a smoother appearance.

DIGITAL IMAGING

Modern remote sensing relies heavily on computer processing to extract and enhance information from remotely sensed data. This requires that the data be in the form of a digital image. In a digital image, large numbers of individual observations, termed pixels, are arranged in a systematic way related to the Earth position from which the observations were acquired.

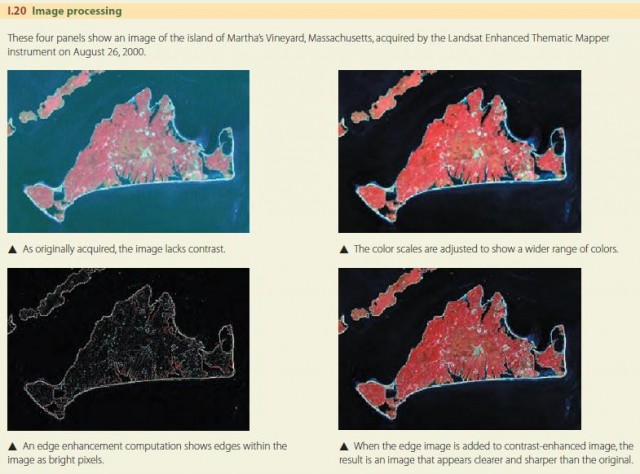

The great advantage of digital images over photographic images is that they can be processed by computer, for example, to increase contrast or sharpen edges (Figure I.20). Image processing refers to the manipulation of digital images to extract, enhance, and display the information that they contain. In remote sensing, image processing is a very broad field that includes many methods and techniques for processing remotely sensed data.
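As a concrete case of image processing, a linear contrast stretch rescales pixel values so that the darkest pixel maps to the bottom of the display range and the brightest to the top. The sketch below is a minimal version operating on a small grid of brightness values. The function name, the 0 to 255 output range, and the sample data are assumptions, and it is not necessarily the enhancement applied in Figure I.20.

```python
def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly rescale a 2-D grid of brightness values to [out_min, out_max]."""
    values = [value for row in image for value in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        # A flat image has no contrast to stretch.
        return [[out_min for _ in row] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (value - lo) * scale) for value in row] for row in image]


# A low-contrast 3 x 3 image whose values occupy only part of the 0-255 range.
low_contrast = [
    [50, 60, 70],
    [65, 75, 85],
    [81, 91, 101],
]

stretched = stretch_contrast(low_contrast)
for row in stretched:
    print(row)
```

After stretching, the values span the full output range, so differences between neighboring pixels become easier to see.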

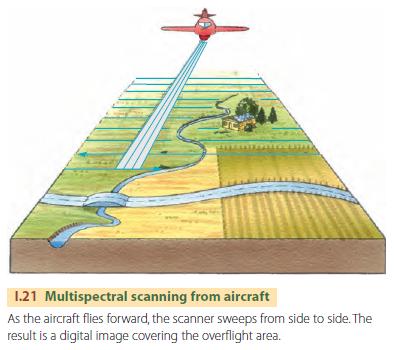

Many remotely sensed digital images are acquired by scanning systems, which may be mounted in aircraft or on orbiting space vehicles. Scanning is the process of receiving information instantaneously from only a very small portion of the area being imaged (Figure I.21). The scanning instrument senses a very small field of view that runs rapidly across the ground scene. Light from the field of view is focused on a detector that responds very quickly to small changes in light intensity. Electronic circuits read out the detector at very short time intervals and record the intensities. Later, the computer reconstructs a digital image of the ground scene from the measurements acquired by the scanning system.

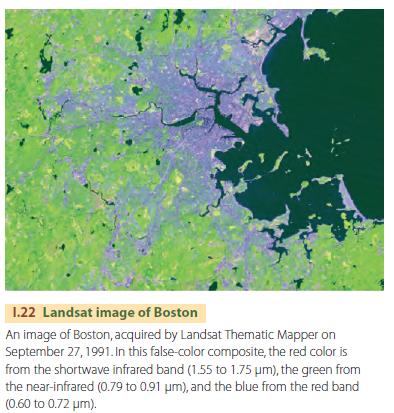

Most scanning systems in common use are multispectral scanners. These devices have multiple detectors and measure brightness in several wavelength regions simultaneously. An example is the Thematic Mapper instrument used aboard the Landsat series of Earth-observing satellites. This instrument simultaneously collects data in seven spectral bands. Six wavebands sample reflected energy in the visible, near-infrared, and shortwave infrared regions, while a seventh records thermal infrared emissions. Figure I.22 shows a color composite of the Boston region acquired by the Landsat Thematic Mapper. The image uses red, near-infrared, and shortwave infrared wavebands to show vegetation in green, beaches and bare soils in pink, and urban surfaces in shades of blue.

An alternative to scanning is direct digital imaging using large numbers of detectors arranged in a two-dimensional array. This technology is in common use in digital cameras and is also used in some imagers on spacecraft. The array has millions of tiny detectors arranged in rows and columns that individually measure the amount of light they receive during an exposure. Electronic circuitry reads out the measurement made by each detector, composing the entire image rapidly. Advanced digital cameras now record detail as finely as film cameras.

ORBITING EARTH SATELLITES

With the development of orbiting Earth satellites carrying remote sensing systems, remote sensing has expanded into a major branch of geographic research. Because orbiting satellites can image and monitor large geographic areas or even the entire Earth, we can now carry out global and regional studies that cannot be done in any other way.

Most satellites designed for remote sensing use a Sun-synchronous orbit. As the satellite circles the Earth, passing near each pole, the Earth rotates underneath it, allowing all of the Earth to be imaged after repeated passes. The orbit is designed so that the images of a location acquired on different days are taken at the same hour of the day. In this way, the solar lighting conditions remain about the same from one image to the next. Typical Sun-synchronous orbits take 90 to 100 minutes to circle the Earth and are located at heights of about 700 to 800 km (430 to 500 mi) above the Earth's surface.

Another orbit used in remote sensing is the geostationary orbit. Instead of orbiting above the poles, a satellite in geostationary orbit constantly revolves above the Equator. The orbit height, about 35,800 km (22,200 mi), is set so that the satellite makes one revolution in the time the Earth takes to rotate once, very nearly 24 hours, and in the same direction that the Earth turns. Thus, the satellite always remains above the same point on the Equator. From its high vantage point, the geostationary orbiter provides a view of nearly half of the Earth at any moment.
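The link between orbit height and orbital period follows from Kepler's third law for a circular orbit, in which the period depends only on the distance from the Earth's center. The sketch below checks the figures quoted above. The gravitational parameter and mean Earth radius are standard values that do not appear in the text, and the calculation assumes a perfectly circular orbit around a spherical Earth.

```python
import math

EARTH_GM = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
EARTH_RADIUS_KM = 6371.0    # mean Earth radius, km (spherical-Earth assumption)


def orbital_period_s(altitude_km):
    """Period in seconds of a circular orbit at the given height above the surface."""
    if altitude_km < 0:
        raise ValueError("altitude must be non-negative")
    radius_m = (EARTH_RADIUS_KM + altitude_km) * 1000.0
    return 2.0 * math.pi * math.sqrt(radius_m ** 3 / EARTH_GM)


for label, altitude in (("low orbit", 700.0), ("low orbit", 800.0), ("geostationary", 35_800.0)):
    period = orbital_period_s(altitude)
    print(f"{label} at {altitude:,.0f} km: {period / 60:.1f} min ({period / 3600:.2f} h)")
```

Under these assumptions, the 700 to 800 km orbits come out near 100 minutes and the 35,800 km orbit close to 24 hours, consistent with the values given in the text.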

Geostationary orbits are ideal for observing weather, and the weather satellite images readily available on television and the Internet are obtained from geostationary remote sensors. Geostationary orbits are also used by communications satellites. Since a geostationary orbiter remains at a fixed position in the sky for an Earthbound observer, a high-gain antenna can be pointed at the satellite and fixed in place permanently, providing high-quality, continuous communications. Satellite television systems also use geostationary orbits.

Remote sensing is an exciting, expanding field within physical geography and the geosciences in general. As you read the rest of this text, you will see many examples of remotely sensed images.